Preparing .npy Data of a Model Running on GPU or CPU - CANN V100R020C20 Development Auxiliary Tool Guide (Training) 01 - Huawei

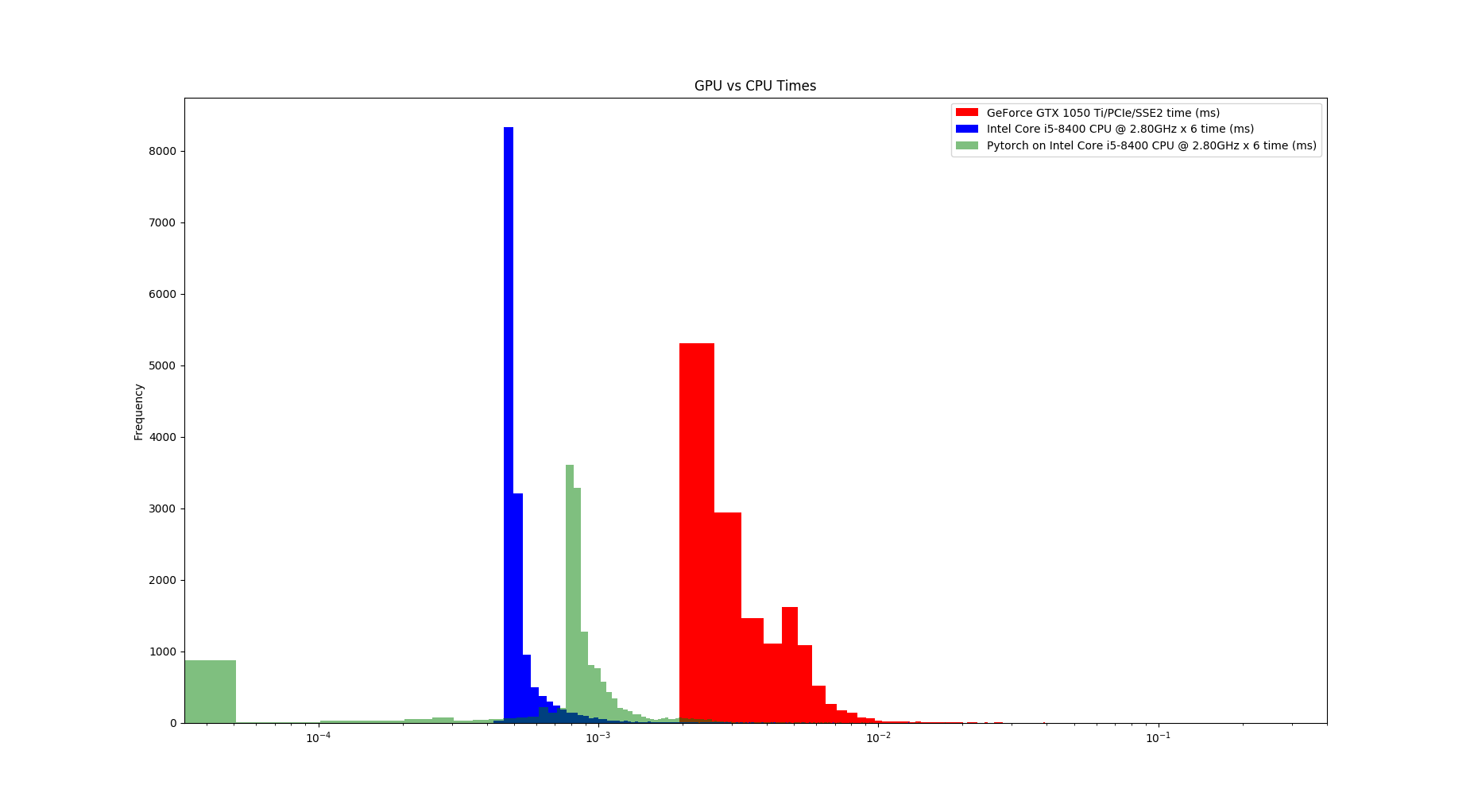

![[QST] Is it faster to just run cuML routines on numpy arrays resident on CPUs?! · Issue #1304 · rapidsai/cuml · GitHub](https://user-images.githubusercontent.com/7390549/67723978-e132e680-f99a-11e9-88ad-163b84bb6678.png)

[QST] Is it faster to just run cuML routines on numpy arrays resident on CPUs?! · Issue #1304 · rapidsai/cuml · GitHub

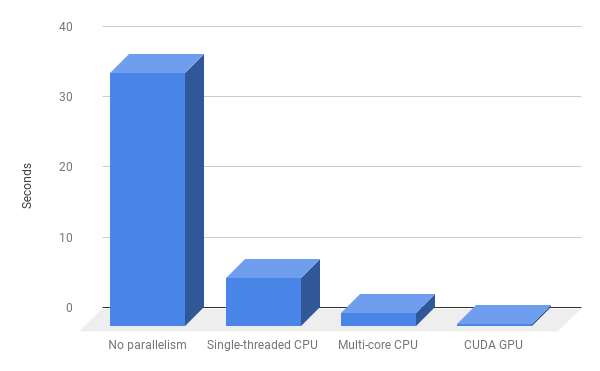

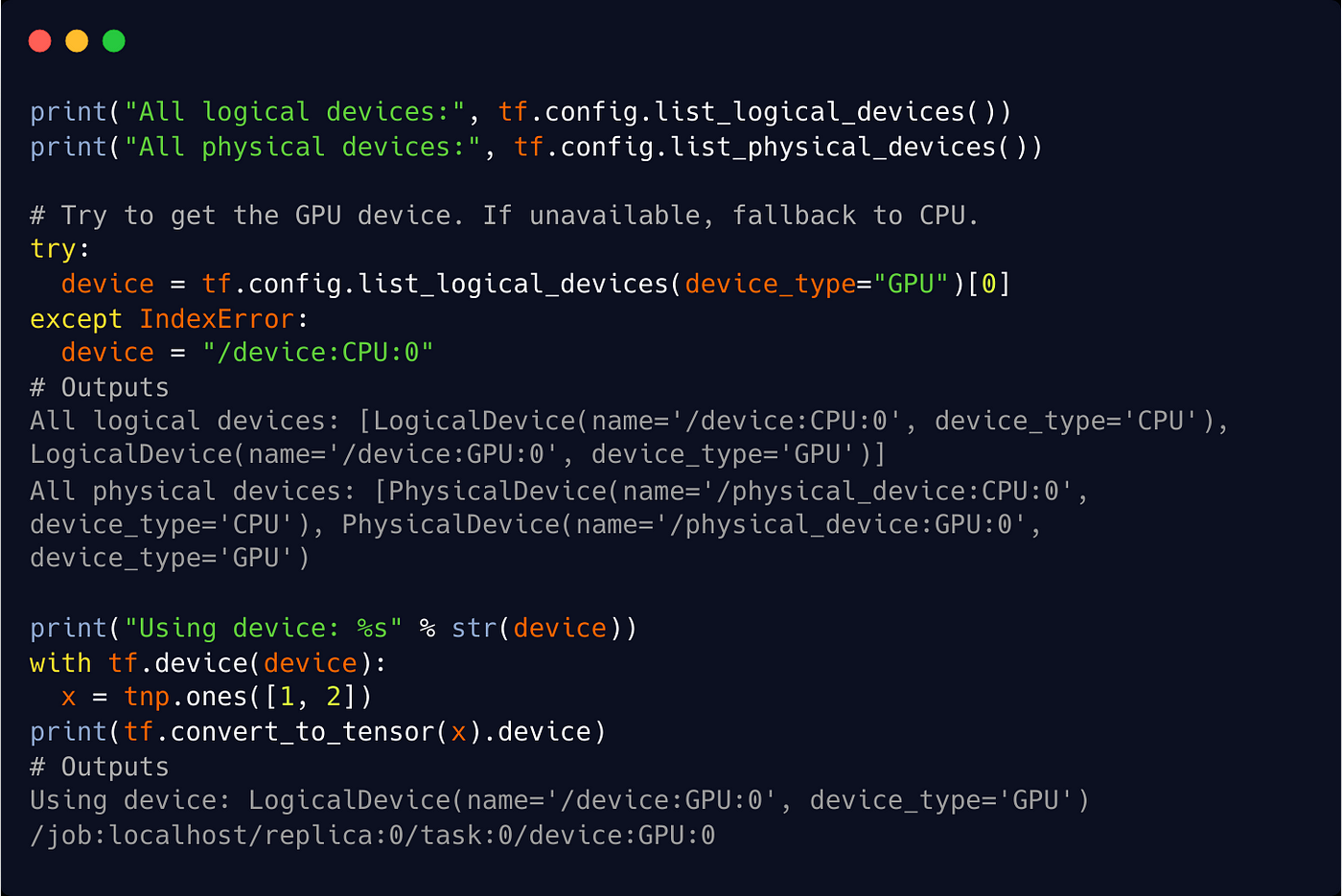

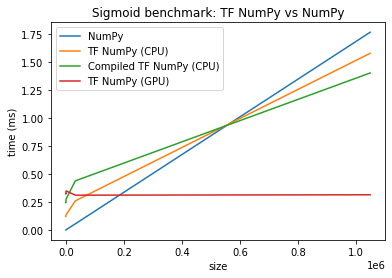

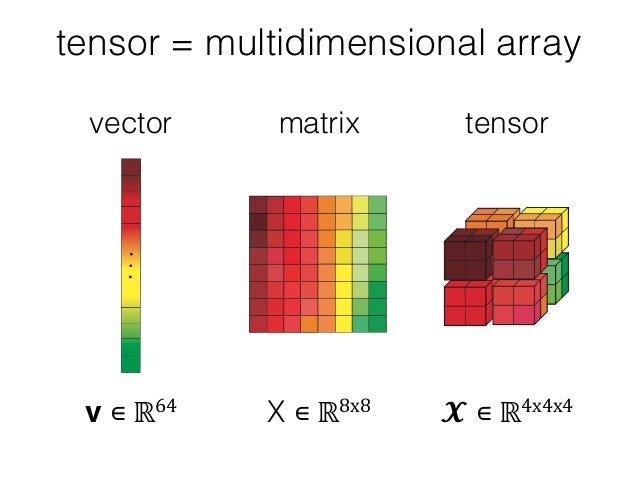

Numpy on GPU/TPU. Make your Numpy code to run 50x faster. | by Sambasivarao. K | Analytics Vidhya | Medium

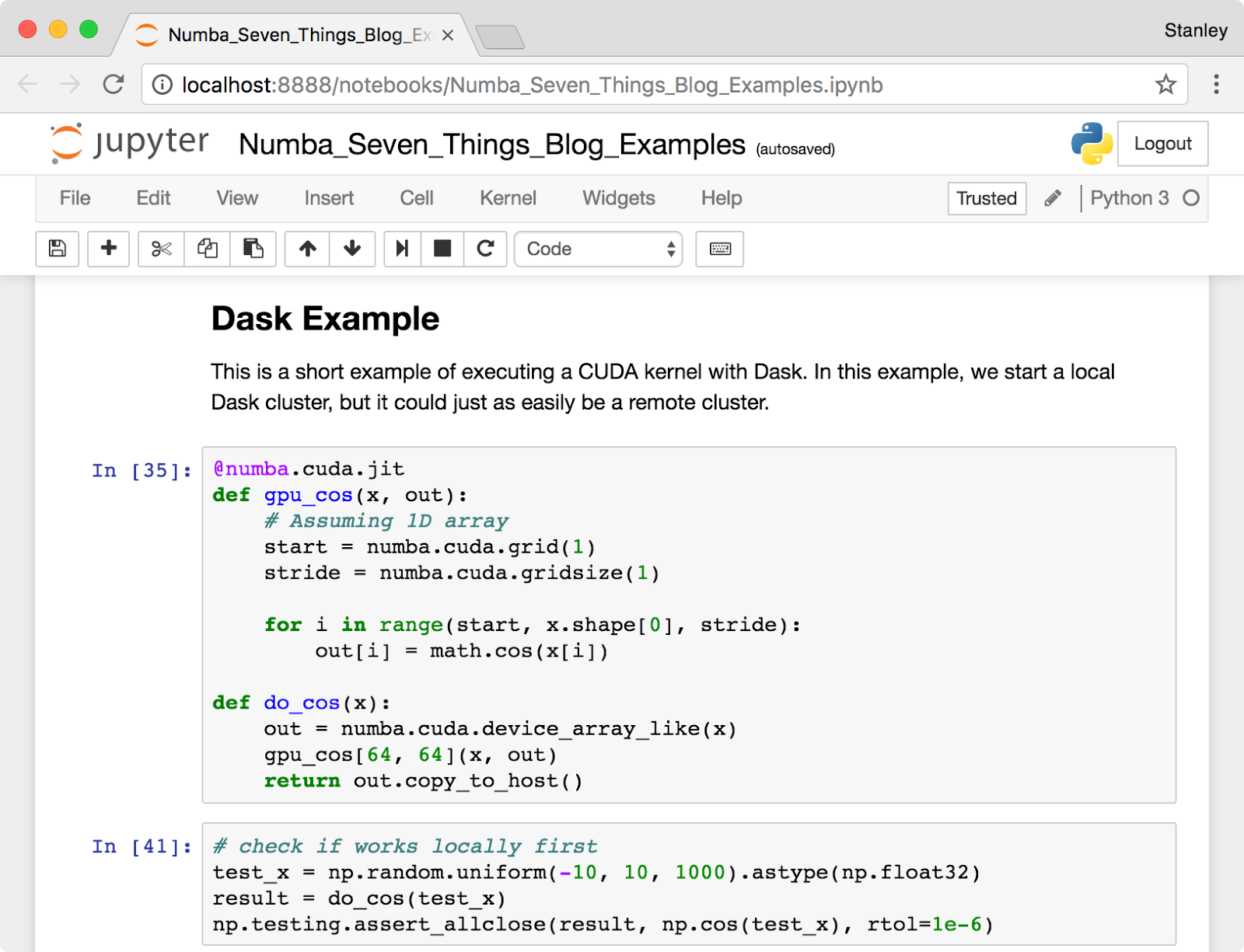

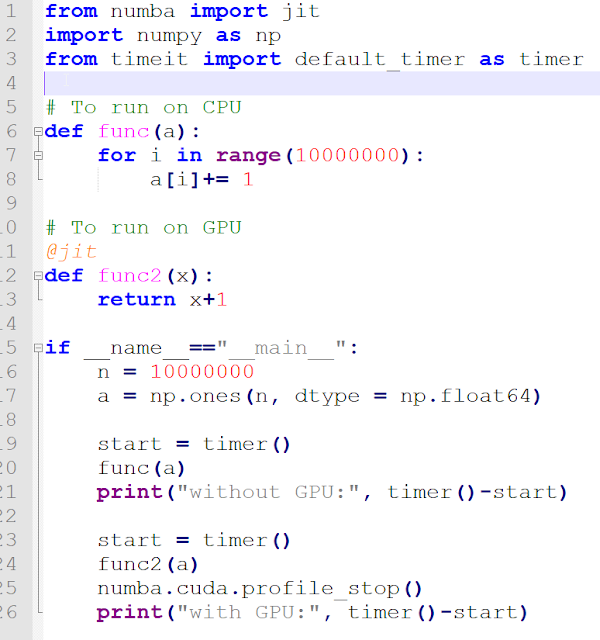

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium