Tensorflow Serving by creating and using Docker images | by Prathamesh Sarang | Becoming Human: Artificial Intelligence Magazine

TensorFlow serving on GPUs using Docker 19.03 needs gpus flag · Issue #1768 · tensorflow/serving · GitHub

Kubeflow Serving: Serve your TensorFlow ML models with CPU and GPU using Kubeflow on Kubernetes | by Ferdous Shourove | intelligentmachines | Medium

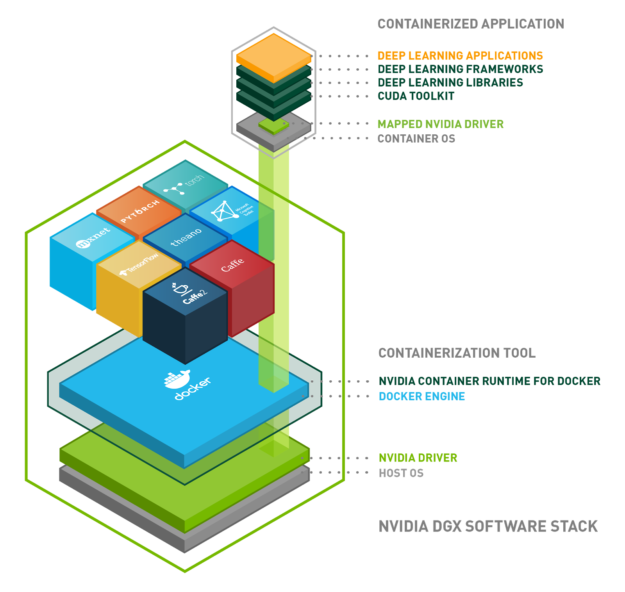

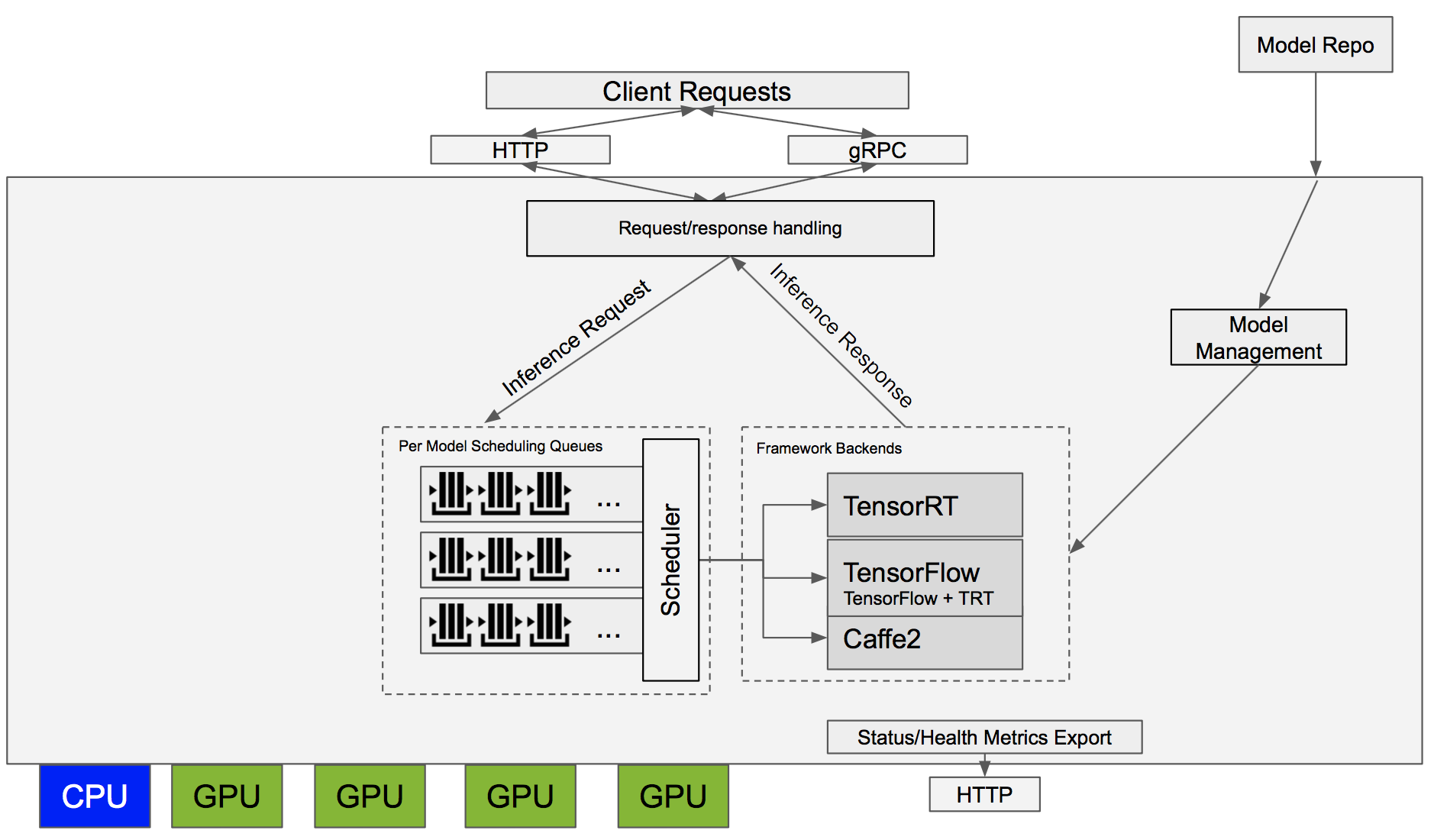

Optimizing TensorFlow Serving performance with NVIDIA TensorRT | by TensorFlow | TensorFlow | Medium

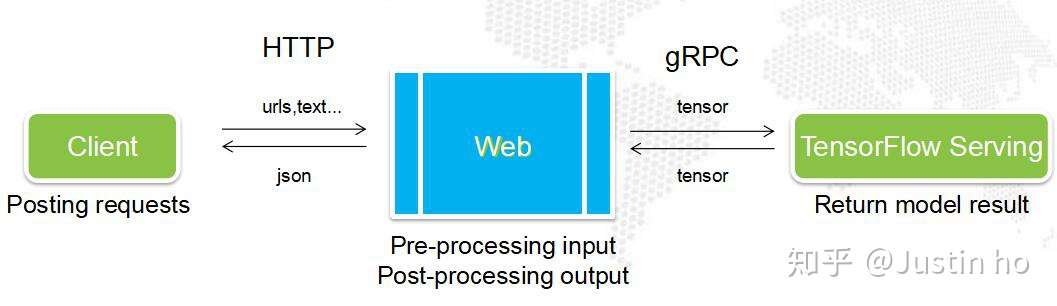

Reduce computer vision inference latency using gRPC with TensorFlow serving on Amazon SageMaker | AWS Machine Learning Blog

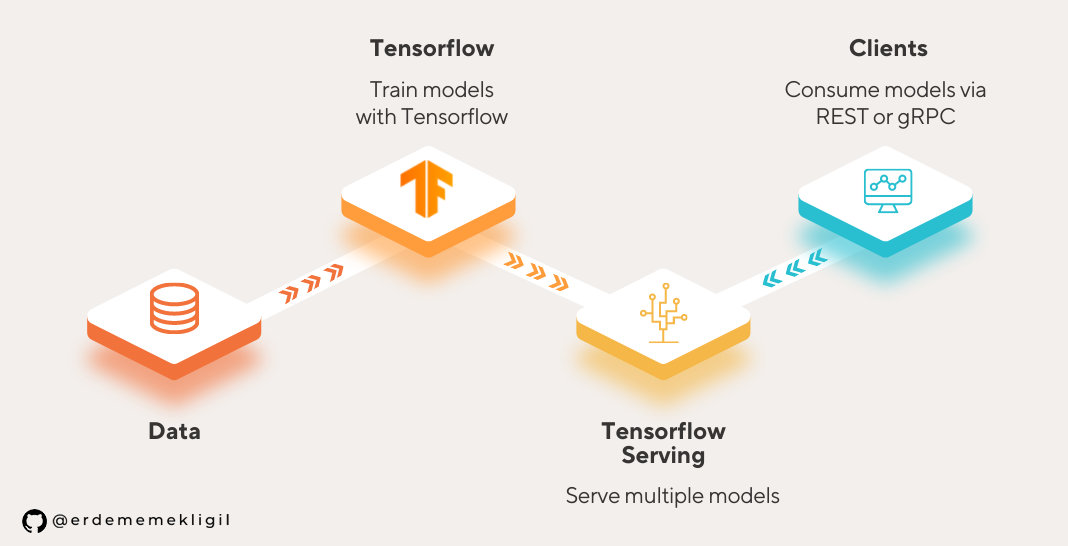

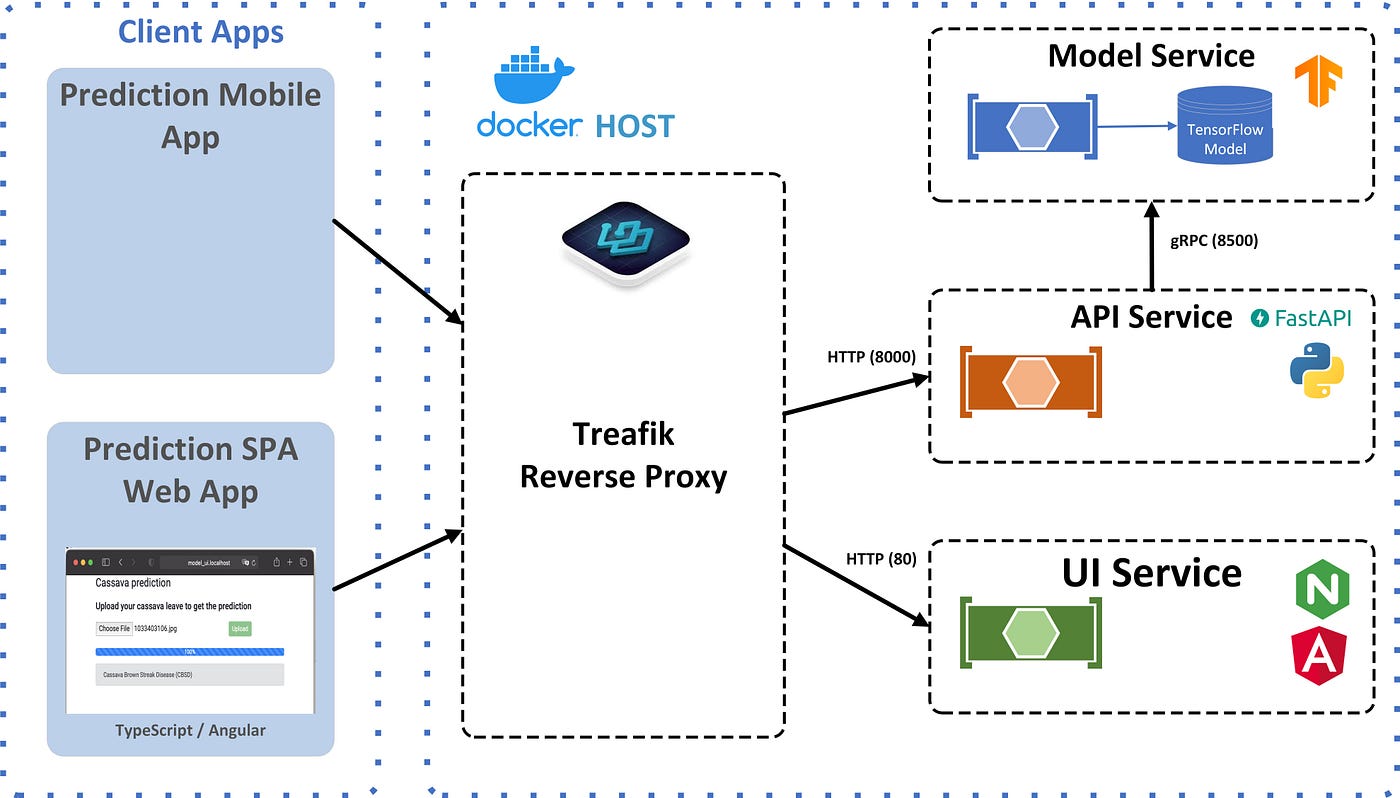

Serving an Image Classification Model with Tensorflow Serving | by Erdem Emekligil | Level Up Coding

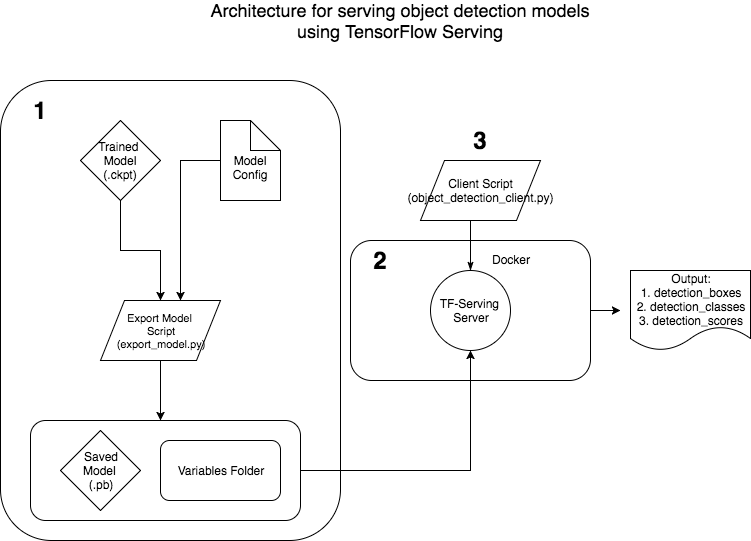

How To Deploy Your TensorFlow Model in a Production Environment | by Patrick Kalkman | Better Programming

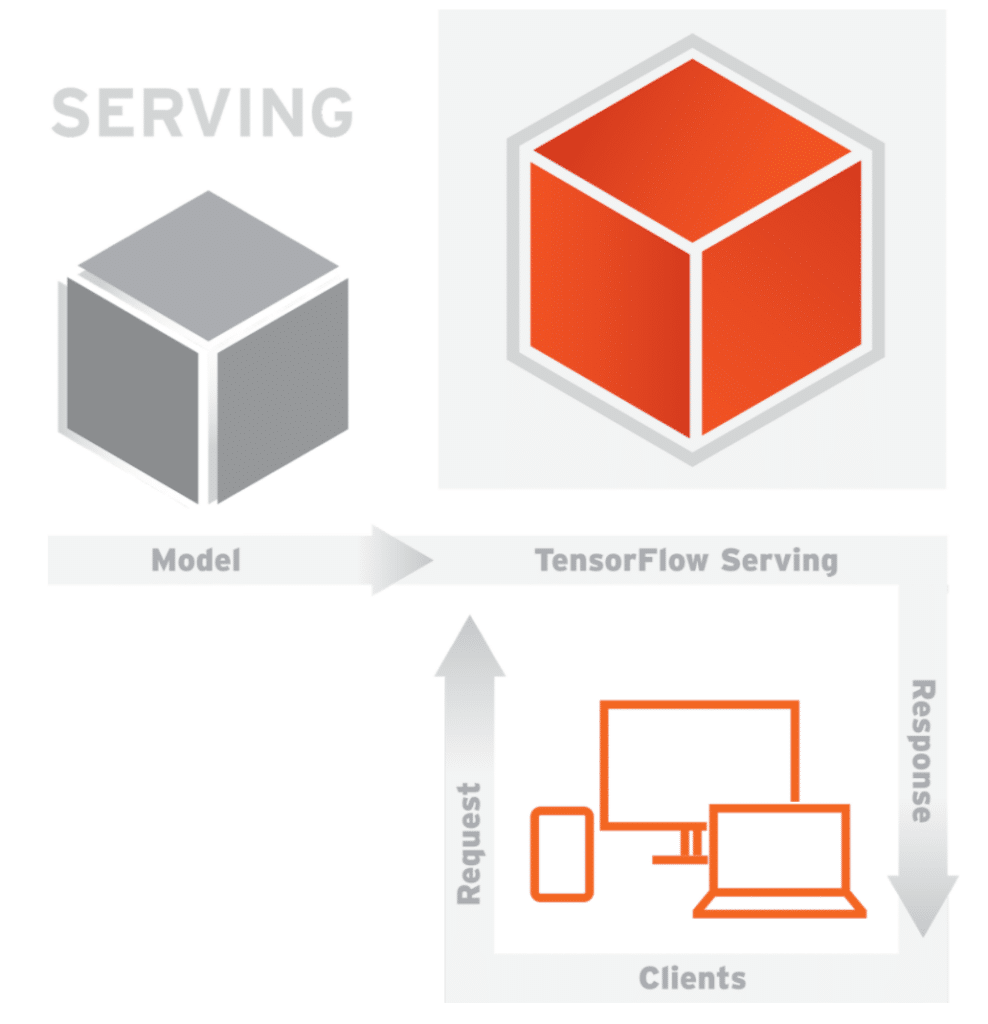

Deploy your machine learning models with tensorflow serving and kubernetes | by François Paupier | Towards Data Science

TF Serving -Auto Wrap your TF or Keras model & Deploy it with a production-grade GRPC Interface | by Alex Punnen | Better ML | Medium
